Pandas Basics for ML

- 강의자료 다운로드: click me

Learning Objectives

- Understand the role of Pandas in machine learning workflows

- Learn how to create and use Series and DataFrame

- Practice data import, inspection, and basic manipulation

- Handle missing values and perform simple summary statistics

- Build a foundation for data preprocessing before applying machine learning models

1. Introduction to Pandas

In data analysis and machine learning, handling raw data effectively is often more critical than the choice of the learning algorithm itself. Real-world datasets are rarely clean; they typically include missing values, mixed data types, and structural irregularities. While Python’s built-in data structures and NumPy arrays are powerful for numerical computation, they are not designed to manage labeled, heterogeneous, and tabular data efficiently. To address this gap, the Pandas library was introduced.

Pandas, developed by Wes McKinney in 2008, provides high-level data structures and operations that simplify the process of manipulating structured data. Its core strength lies in two fundamental data structures: the Series and the DataFrame. Unlike plain arrays, these structures incorporate labeled indexing, enabling more intuitive and flexible data handling.

The advantages of Pandas can be summarized as follows.

First, it allows intuitive data manipulation with concise and readable syntax.

Second, it integrates seamlessly with other scientific computing libraries such as NumPy, Matplotlib, and scikit-learn, making it highly versatile for end-to-end workflows.

Third, it offers built-in functionality for data cleaning, transformation, grouping, and statistical summarization, thereby supporting the entire exploratory data analysis (EDA) pipeline.

Nevertheless, Pandas is not without limitations. As an in-memory library, its performance degrades significantly with very large datasets that exceed available RAM. For large-scale or distributed data processing, specialized frameworks such as Apache Spark or Dask are required.

In the context of machine learning, Pandas plays a foundational role. Tasks such as importing data from CSV or Excel files, inspecting variables, handling missing values, and engineering new features are typically performed using Pandas before passing the processed data to machine learning libraries.

The two key data structures provided by Pandas are:

-

Series: A one-dimensional labeled array, conceptually similar to a column in a spreadsheet or a single field in a database table. Each element is associated with an index label.

-

DataFrame: A two-dimensional labeled data structure composed of multiple Series aligned by a common index. It is analogous to an entire spreadsheet or a relational database table, with rows representing records and columns representing variables.

Pandas is not merely a convenience tool but a fundamental component of the modern data science and machine learning ecosystem. By bridging the gap between raw data and model-ready input, it provides the analytical foundation upon which subsequent computational methods can operate effectively.

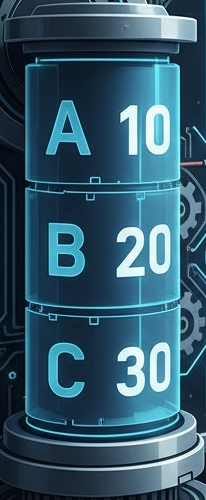

Series

Definition

A Series in Pandas is a one-dimensional labeled array that can hold data of any type (integers, floats, strings, objects). Conceptually, it is similar to a single column in a spreadsheet or a single field in a database table. Each value in a Series is associated with an index, which serves as a label for referencing and aligning the data. The index can be either default (0, 1, 2, …) or explicitly defined by the user.

Creating a Series

A Series can be created from a Python list, NumPy array, or dictionary. The following example demonstrates creating a simple Series from a list while specifying custom index labels.

import pandas as pd

# Create a Series with explicit index labels

data = pd.Series([10, 20, 30], index=["A", "B", "C"])

print(data)

Execution Result:

A 10

B 20

C 30

dtype: int64

Explanation

In this example:

The values are [10, 20, 30].

The corresponding indices are "A", "B", and "C".

The output also shows the data type (int64), which indicates that all values are integers.

This labeled structure makes it possible to access values not only by position but also by their assigned labels.

The index and values attributes can be used to extract the index labels and the stored values separately.

Access index labels

print(data.index)

Access values

print(data.values)

Execution Result:

Index(['A', 'B', 'C'], dtype='object')

[10 20 30]

Interpretation

-

data.indexreturns the labels of the Series ('A','B','C'). -

data.valuesreturns the underlying NumPy array of values ([10, 20, 30]).

This separation of labels and values is what makes Pandas Series more flexible than a simple NumPy array, as it allows for intuitive data alignment, indexing, and manipulation.

DataFrame

- DataFrame 생성

dictlistofdictsCSVimport

.head(),.info(),.describe()활용- 예제 코드 + 실행 결과 + 해석

Definition

A DataFrame is a two-dimensional, labeled data structure composed of columns of potentially different types. It can be constructed from a variety of Python-native objects and external files.

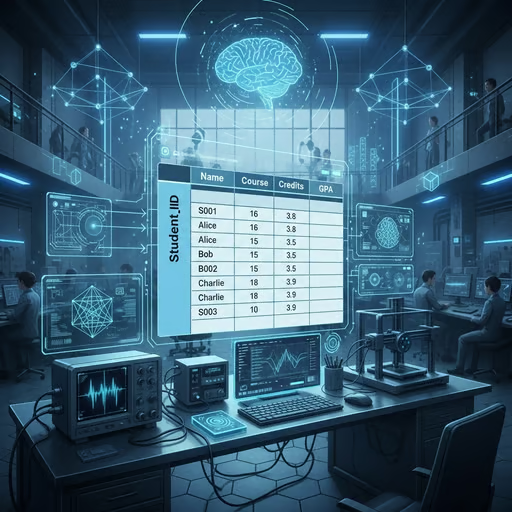

Creating a DataFrame from a dict

A dictionary maps column names → sequences of values. All columns must have the same length.

import pandas as pd

data_dict = {

"Name": ["Alice", "Bob", "Charlie"],

"Age": [25, 30, 35],

"Score": [85, 90, 95]

}

df_from_dict = pd.DataFrame(data_dict)

print(df_from_dict)

Execution Result:

Name Age Score

0 Alice 25 85

1 Bob 30 90

2 Charlie 35 95

Explanation

Keys become column labels ("Name", "Age", "Score").

The default row index is integer-based (0, 1, 2).

Each column can hold a different dtype (string, int, etc.).

Creating a DataFrame from a list of dict

A DataFrame can also be constructed from a list of Python dictionaries, where each dictionary corresponds to one row of the DataFrame.

The keys of the dictionary represent column names, and the values represent the data for that row.

If a key is missing in any dictionary, Pandas automatically fills the corresponding cell with a missing value (NaN).

import pandas as pd

# Each dictionary represents a row

rows = [

{"Name": "Alice", "Age": 25, "Score": 85},

{"Name": "Bob", "Age": 30, "Score": 90},

{"Name": "Dana", "Score": 88} # Age is missing here

]

df_lod = pd.DataFrame(rows)

print(df_lod)

Execution Result:

Name Age Score

0 Alice 25.0 85

1 Bob 30.0 90

2 Dana NaN 88

Explanation

Each dictionary in the list becomes a row in the DataFrame.

The union of all keys across dictionaries defines the columns.

Missing keys are represented as NaN (Not a Number), indicating missing data.

Pandas automatically aligns data based on the dictionary keys, making this method useful for heterogeneous or incomplete datasets.

Creating a DataFrame by importing a CSV file

In practice, datasets are often stored in CSV (Comma-Separated Values) format. Pandas provides the read_csv() function to easily load such files into a DataFrame. By default, the first line of the file is treated as the header, and each subsequent line corresponds to a row of data.

Suppose we have a file named 05_sample_students.csv with the following contents:

Name,Age,Score

Alice,25,85

Bob,30,90

Charlie,35,95

- Download

05_sample_students.csv: click me

We can load this CSV file as follows:

import pandas as pd

# Load the CSV file into a DataFrame

df_csv = pd.read_csv("05_sample_students.csv")

print(df_csv)

Execution Result:

Name Age Score

0 Alice 25 85

1 Bob 30 90

2 Charlie 35 95

Explanation

pd.read_csv("sample_students.csv") reads the file and constructs a DataFrame.

The first row is used as column headers ("Name", "Age", "Score").

Each subsequent line becomes a row in the DataFrame.

Pandas automatically assigns a default integer index (0, 1, 2, …).

If a column should be used as the index, the parameter index_col can be specified:

df_csv_indexed = pd.read_csv("sample_students.csv", index_col="Name")

print(df_csv_indexed)

Execution Result:

Age Score

Name

Alice 25 85

Bob 30 90

Charlie 35 95

Here, the "Name" column is used as the row index, which can be useful when the column uniquely identifies each record.

Using .head(), .info(), and .describe()

Once a DataFrame is created, it is often necessary to quickly inspect its structure and contents. Pandas provides several convenient methods for this purpose:

.head(n): Displays the first n rows (default is 5). Useful for quickly checking the beginning of a dataset..info(): Prints a concise summary including column names, non-null counts, and data types..describe(): Generates descriptive statistics (count, mean, std, min, quartiles, max) for numerical columns.

Generate & Save Example Dataset

The following code generates a CSV file named 05_random_students.csv with 1000 rows for demonstration. Each row represents a student with random age and scores.

import pandas as pd

import numpy as np

# Generate random dataset

np.random.seed(42)

n = 1000

data = {

"StudentID": range(1, n+1),

"Age": np.random.randint(18, 30, size=n),

"MathScore": np.random.randint(50, 100, size=n),

"EnglishScore": np.random.randint(50, 100, size=n),

"ScienceScore": np.random.randint(50, 100, size=n)

}

df = pd.DataFrame(data)

# Save to CSV

df.to_csv("05_random_students.csv", index=False)

- Download

05_random_students.csv: click me

Loading the CSV File

import pandas as pd

# Load the dataset

df = pd.read_csv("05_sample_students.csv")

# Show the first 5 rows

print(df.head())

Execution Result (sample):

StudentID Age MathScore EnglishScore ScienceScore

0 1 24 88 61 58

1 2 28 51 92 73

2 3 28 89 74 92

3 4 23 83 87 72

4 5 27 94 66 87

Inspecting the DataFrame with .info()

print(df.info())

Execution Result:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1000 entries, 0 to 999

Data columns (total 5 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 StudentID 1000 non-null int64

1 Age 1000 non-null int64

2 MathScore 1000 non-null int64

3 EnglishScore 1000 non-null int64

4 ScienceScore 1000 non-null int64

dtypes: int64(5)

memory usage: 39.2 KB

.info() confirms the dataset has 1000 rows and 5 columns, all of type int64, with no missing values.

Summarizing Statistics with .describe()

print(df.describe())

Execution Result (sample):

StudentID Age MathScore EnglishScore ScienceScore

count 1000.00000 1000.000000 1000.000000 1000.000000 1000.000000

mean 500.50000 23.944000 74.393000 74.348000 74.189000

std 288.81944 3.430000 14.367000 14.565000 14.442000

min 1.00000 18.000000 50.000000 50.000000 50.000000

25% 250.75000 21.000000 62.000000 62.000000 62.000000

50% 500.50000 24.000000 75.000000 74.000000 74.000000

75% 750.25000 27.000000 87.000000 87.000000 87.000000

max 1000.00000 29.000000 99.000000 99.000000 99.000000

.describe() provides a quick overview of the distribution of numerical columns, including the mean, standard deviation, quartiles, and extremes. This is especially useful during exploratory data analysis (EDA).

Summary and Interpretation

The DataFrame is the core data structure of Pandas and represents data in a tabular format with labeled rows and columns.

In this section, we explored three common ways of creating a DataFrame: from a Python dictionary, from a list of dictionaries, and by importing a CSV file.

These methods demonstrate the flexibility of Pandas in handling both small, manually created datasets and large external data files.

We also introduced essential inspection methods:

-

.head()allows a quick look at the first few rows of the dataset, making it easy to verify that the data has been read correctly. -

.info()provides a concise summary of the dataset, including the number of entries, data types, and presence of missing values. -

.describe()generates basic statistical measures such as mean, standard deviation, and quartiles, which are invaluable during the early stages of exploratory data analysis (EDA).

Through these tools, students should recognize that Pandas is not merely a container for data but a practical framework for data exploration, cleaning, and preparation. The ability to quickly construct, inspect, and summarize a dataset is fundamental in machine learning workflows, as it directly impacts the quality of subsequent analysis and modeling.

Indexing

Concept of Index in Series

In Pandas, a Series is essentially a one-dimensional array of values accompanied by an index.

The index serves as a label that uniquely identifies each element in the Series.

By default, Pandas assigns an integer index starting from 0. However, users can define custom labels, such as strings or dates, which makes data more descriptive and easier to access.

Example:

-

Values:

[10, 20, 30] -

Default index:

[0, 1, 2] -

Custom index:

['A', 'B', 'C']

This design allows flexible access. For instance, series[0] retrieves the first element by position, while series['A'] retrieves it by label.

Concept of Index in DataFrame

A DataFrame extends this concept into two dimensions, with both row indices and column labels.

-

Row index: Identifies each record (row). By default, this is an integer sequence (0, 1, 2, …).

-

Column index: Identifies each variable (column) and is typically defined by the dataset’s header or assigned during creation.

This dual indexing system makes DataFrames highly versatile. For example:

-

Row access by index label (

df.loc[0]for the first row). -

Column access by name (

df['Age']for the Age column). -

Combined access by row and column (

df.loc[0, 'Age']).

Importance of Indexing

The explicit separation of values and indices in both Series and DataFrames provides several benefits:

-

Data alignment: Operations between Series or DataFrames automatically align based on index labels.

-

Readability: Indices provide meaningful labels, reducing ambiguity compared to raw numerical arrays.

-

Flexibility: Users can reassign, reset, or set new indices (e.g., using a unique identifier as the row index).

-

Efficiency: Indexing allows fast and direct access to subsets of data, which is essential in large-scale preprocessing.

In summary, the index in Pandas is more than a positional reference; it is a labeling mechanism that provides structure, clarity, and power to data manipulation in both Series and DataFrames.

Label-based Indexing with .loc

The .loc accessor selects rows and columns using their explicit labels.

-

Rows are identified by their index labels.

-

Columns are identified by their names.

-

Official Doc: https://pandas.pydata.org/docs/reference/api/pandas.DataFrame.loc.html

import pandas as pd

data = {

"Name": ["Alice", "Bob", "Charlie"],

"Age": [25, 30, 35],

"Score": [85, 90, 95]

}

df = pd.DataFrame(data, index=["S1", "S2", "S3"])

# Access by label

print(df)

print("*****" * 5)

print(df.loc["S1"]) # Entire row with label "S1"

print("*****" * 5)

print(df.loc["S2", "Age"]) # Value in row "S2", column "Age"

print("*****" * 5)

print(df.loc[["S1", "S3"], "Name"]) # Subset of rows and one column

Execution Result:

Name Age Score

S1 Alice 25 85

S2 Bob 30 90

S3 Charlie 35 95

*************************

Name Alice

Age 25

Score 85

Name: S1, dtype: object

*************************

30

*************************

S1 Alice

S3 Charlie

Name: Name, dtype: object

Interpretation

-

.loc["S1"]retrieves the row labeled "S1". -

.loc["S2", "Age"]retrieves the"Age"value for row"S2". -

Lists of labels can be passed to extract multiple rows or columns.

Position-based Indexing with .iloc

While .loc uses labels to access rows and columns, the .iloc accessor is purely integer position-based.

It works like Python list slicing, where both rows and columns are referenced by zero-based indices.

Example

import pandas as pd

data = {

"Name": ["Alice", "Bob", "Charlie"],

"Age": [25, 30, 35],

"Score": [85, 90, 95]

}

df = pd.DataFrame(data, index=["S1", "S2", "S3"])

# Access by label

print(df)

print("*****" * 5)

print(df.iloc[0]) # First row

print("*****" * 5)

print(df.iloc[1, 1]) # Second row, second column

print("*****" * 5)

print(df.iloc[[0, 2], 0]) # First and third rows, first column

Execution Result:

Name Alice

Age 25

Score 85

Name: 0, dtype: object

30

0 Alice

2 Charlie

Name: Name, dtype: object

Interpretation

-

df.iloc[0]returns the first row of the DataFrame. -

df.iloc[1, 1]returns the element at row position 1 and column position 1 (30 in this example). -

df.iloc[[0, 2], 0]returns the values of the first and third rows from the first column ("Alice","Charlie").

Unlike .loc, .iloc does not recognize labels; it is always based on numerical positions.

This makes .iloc especially useful when performing algorithmic selection or slicing, such as iterating over specific row ranges.

Differences between Label-based Indexing (.loc) and Position-based Indexing (.iloc)

Pandas provides two complementary ways to access data: .loc and .iloc.

Although both are used for selecting rows and columns, they operate on fundamentally different principles.

1. Basis of Selection

-

.loc: Uses labels (row index names and column names). -

.iloc: Uses integer positions (0-based index, like Python lists).

2. Inclusion Rules in Slicing

.loc: Both the start and end labels are inclusive.

df.loc["S1":"S3"] # includes rows S1, S2, and S3

.iloc: The end position is exclusive, following Python slicing rules.

df.iloc[0:3] # includes rows at positions 0, 1, and 2

Typical Use Cases

-

.loc-

More intuitive when datasets have meaningful labels.

-

Commonly used when accessing data by identifiers such as student IDs, dates, or names.

-

-

.iloc-

Useful when working with purely positional logic.

-

Preferred in loops, algorithmic selection, or when labels are not known in advance.

-

Example Comparison

# Sample DataFrame

import pandas as pd

df = pd.DataFrame({

"Name": ["Alice", "Bob", "Charlie"],

"Age": [25, 30, 35]

}, index=["S1", "S2", "S3"])

print(df.loc["S2", "Age"]) # Access by label

print(df.iloc[1, 1]) # Access by position

Execution Result:

30

30

Key Distinction

-

.loc→ Think in terms of what the label says. -

.iloc→ Think in terms of where the data is located.

In summary, .loc is label-oriented, while .iloc is position-oriented.

Choosing between them depends on whether you are working with descriptive labels or numerical positions.

Boolean Indexing (Conditional Selection)

Boolean indexing allows selecting rows in a DataFrame based on conditions applied to column values.

Instead of referencing labels or positions, we create a Boolean mask—a series of True/False values—that determines which rows are included in the result.

Example

import pandas as pd

data = {

"Name": ["Alice", "Bob", "Charlie", "Dana"],

"Age": [25, 30, 35, 28],

"Score": [85, 90, 65, 72]

}

df = pd.DataFrame(data)

# Select rows where Age is greater than 28

print(df[df["Age"] > 28])

# Select rows where Score is at least 80

print(df[df["Score"] >= 80])

# Combine conditions with & (and), | (or)

print(df[(df["Age"] > 25) & (df["Score"] > 70)])

Boolean Indexing (Conditional Selection)

Boolean indexing allows selecting rows in a DataFrame based on conditions applied to column values.

Instead of referencing labels or positions, we create a Boolean mask—a series of True/False values—that determines which rows are included in the result.

Example

import pandas as pd

data = {

"Name": ["Alice", "Bob", "Charlie", "Dana"],

"Age": [25, 30, 35, 28],

"Score": [85, 90, 65, 72]

}

df = pd.DataFrame(data)

# Select rows where Age is greater than 28

print(df[df["Age"] > 28])

# Select rows where Score is at least 80

print(df[df["Score"] >= 80])

# Combine conditions with & (and), | (or)

print(df[(df["Age"] > 25) & (df["Score"] > 70)])

Execution Result:

Name Age Score

2 Charlie 35 65

3 Dana 28 72

Name Age Score

0 Alice 25 85

1 Bob 30 90

Name Age Score

1 Bob 30 90

3 Dana 28 72

Interpretation

-

df[df["Age"] > 28]selects all rows where the value in"Age"is greater than 28. -

df[df["Score"] >= 80]returns only students with scores of 80 or higher. -

Multiple conditions can be combined using logical operators:

-

&for AND -

|for OR -

~for NOT

-

Boolean indexing is powerful for filtering subsets of data based on logical conditions and is a fundamental tool in preprocessing steps for machine learning.

Resetting and Setting Index

In Pandas, the index is flexible and can be reset or reassigned to better reflect the structure of the dataset.

Two useful methods are:

-

reset_index(): Resets the existing index to the default integer index. The old index can be preserved as a column if needed. -

set_index(): Assigns one of the columns to become the new index.

Example

Example

import pandas as pd

data = {

"StudentID": [101, 102, 103],

"Name": ["Alice", "Bob", "Charlie"],

"Score": [85, 90, 95]

}

df = pd.DataFrame(data)

# Set "StudentID" as the index

df_indexed = df.set_index("StudentID")

print("DataFrame with StudentID as index:")

print(df_indexed)

# Reset index back to default integers

df_reset = df_indexed.reset_index()

print("\nDataFrame after reset_index():")

print(df_reset)

Execution Result:

DataFrame with StudentID as index:

Name Score

StudentID

101 Alice 85

102 Bob 90

103 Charlie 95

DataFrame after reset_index():

StudentID Name Score

0 101 Alice 85

1 102 Bob 90

2 103 Charlie 95

Interpretation

set_index("StudentID")makes the"StudentID"column the new index, which can be useful when each student has a unique identifier.reset_index()restores the default integer index (0, 1, 2) and moves"StudentID"back into the columns.- These methods provide flexibility when reorganizing data, especially when preparing datasets for merging, joining, or exporting.

Selection

Selecting a Single Column

A single column can be selected by using its column label.

The result is a Series.

import pandas as pd

data = {

"Name": ["Alice", "Bob", "Charlie"],

"Age": [25, 30, 35],

"Score": [85, 90, 95]

}

df = pd.DataFrame(data)

# Select a single column

print(df["Name"])

Execution Result:

0 Alice

1 Bob

2 Charlie

Name: Name, dtype: object

Selecting Multiple Columns

Multiple columns can be selected by passing a list of column labels.

The result is a DataFrame.

print(df[["Name", "Score"]])

Execution Result:

Name Score

0 Alice 85

1 Bob 90

2 Charlie 95

Selecting Rows and Columns Together

Both rows and columns can be selected simultaneously using .loc (label-based) or .iloc (position-based).

# Select the "Score" of the first two students

print(df.loc[0:1, "Score"])

# Or equivalently by position

print(df.iloc[0:2, 2])

Execution Result:

0 85

1 90

Name: Score, dtype: int64

0 85

1 90

Name: Score, dtype: int64

Slicing Examples

Slicing can be used for row ranges or column ranges.

# Slice rows

print(df[0:2])

# Slice rows and columns together

print(df.loc[0:2, ["Name", "Age"]])

Execution Result:

Name Age Score

0 Alice 25 85

1 Bob 30 90

Name Age

0 Alice 25

1 Bob 30

2 Charlie 35

Useful Practice Items

-

Select the

"Score"column from the DataFrame. -

Select the

"Name"and"Age"columns together. -

Retrieve the

"Age"of the student named"Bob". -

Extract the rows corresponding to the first and third students, but only the

"Name"column.

Handling Missing Data

Using dropna() and fillna()

Real-world datasets often contain missing values, which must be addressed before applying machine learning models. Pandas provides simple methods to handle missing data.

dropna(): Removes rows or columns that contain missing values.fillna(): Replaces missing values with a specified constant or a computed value (e.g., mean, median).

Example Dataset

import pandas as pd

import numpy as np

data = {

"Name": ["Alice", "Bob", "Charlie", "Dana"],

"Age": [25, np.nan, 35, 28],

"Score": [85, 90, np.nan, 72]

}

df = pd.DataFrame(data)

print(df)

Execution Result:

Name Age Score

0 Alice 25.0 85.0

1 Bob NaN 90.0

2 Charlie 35.0 NaN

3 Dana 28.0 72.0

Using dropna()

# Remove rows with any missing values

df_drop = df.dropna()

print(df_drop)

Execution Result:

Name Age Score

0 Alice 25.0 85.0

3 Dana 28.0 72.0

Interpretation

dropna() removed rows 1 and 2 because they contained missing values.

Using fillna()

# Replace missing values with 0

df_fill_zero = df.fillna(0)

print(df_fill_zero)

# Replace missing values with the mean of the column

df_fill_mean = df.fillna(df.mean(numeric_only=True))

print(df_fill_mean)

Execution Result (fill with 0):

Name Age Score

0 Alice 25.0 85.0

1 Bob 0.0 90.0

2 Charlie 35.0 0.0

3 Dana 28.0 72.0

Execution Result (fill with mean):

Name Age Score

0 Alice 25.000000 85.000000

1 Bob 29.333333 90.000000

2 Charlie 35.000000 82.333333

3 Dana 28.000000 72.000000

Interpretation

-

fillna(0)replaces all missing values with 0. -

fillna(df.mean())replaces missing values with the mean of each column. -

Choice of method depends on the context of the data and the machine learning task.

Practice Exercise

-

Load a dataset containing missing values.

-

Use

dropna()to remove rows with missing data. -

Use

fillna()to replace missing values with a constant (e.g., -1). -

Use

fillna()with column means to impute missing values. -

Compare the results and discuss which method is more appropriate in each case.

Basic Operations

Summary Statistics

Pandas provides convenient methods to quickly compute summary statistics for numerical data.

import pandas as pd

data = {

"Name": ["Alice", "Bob", "Charlie", "Dana"],

"Age": [25, 30, 35, 28],

"Score": [85, 90, 95, 72]

}

df = pd.DataFrame(data)

# Summary statistics

print(df["Score"].mean()) # Mean

print(df["Score"].max()) # Maximum

print(df["Score"].min()) # Minimum

print(df.describe()) # Comprehensive summary

Execution Result (sample):

85.5

95

72

Age Score

count 4.000000 4.000000

mean 29.500000 85.500000

std 4.203173 9.574271

min 25.000000 72.000000

25% 27.250000 81.750000

50% 29.000000 87.500000

75% 31.250000 91.250000

max 35.000000 95.000000

Interpretation

-

mean,max,minreturn single values. -

.describe()provides count, mean, std, min, quartiles, and max for quick overview.

Using groupby

The groupby() method allows aggregation of data based on categories.

import pandas as pd

data = {

"Department": ["Math", "Math", "CS", "CS", "Physics"],

"Student": ["Alice", "Bob", "Charlie", "Dana", "Evan"],

"Score": [85, 90, 95, 72, 88]

}

df = pd.DataFrame(data)

# Average score by department

print(df)

print("*****" * 3)

print(df.groupby("Department")["Score"].mean())

Execution Result:

Department Student Score

0 Math Alice 85

1 Math Bob 90

2 CS Charlie 95

3 CS Dana 72

4 Physics Evan 88

*************************

Department

CS 83.5

Math 87.5

Physics 88.0

Name: Score, dtype: float64

Interpretation

-

Groups are formed by the values in the

"Department"column. -

For each group, the mean of

"Score"is calculated. -

groupbyis essential for analyzing datasets with categorical variables.

Using merge

The merge() function combines two DataFrames based on a common key, similar to SQL joins.

students = pd.DataFrame({

"StudentID": [1, 2, 3],

"Name": ["Alice", "Bob", "Charlie"]

})

scores = pd.DataFrame({

"StudentID": [1, 2, 3],

"Score": [85, 90, 95]

})

# Merge on StudentID

df_merged = pd.merge(students, scores, on="StudentID")

print(df_merged)

Execution Result:

StudentID Name Score

0 1 Alice 85

1 2 Bob 90

2 3 Charlie 95

Interpretation

-

pd.merge()combines two DataFrames on a shared column (StudentID). -

Default behavior is an inner join; other join types (

left,right,outer) can be specified with thehowparameter. -

This operation is crucial when integrating data from multiple sources.

Homeworks

Practice Problems

-

Load the dataset

05_random_students.csvand display the first 10 rows. Hint: Use.head(10). -

Calculate the average

MathScorefor students older than 25. Hint: Apply Boolean indexing with a condition on the"Age"column. -

Use

groupbyto compute the averageScienceScoreby age group (e.g., students aged 18–22, 23–26, 27–30). Hint: Create a new column for age groups before grouping. -

Merge two DataFrames: one containing student IDs and names, and another containing student IDs and their

EnglishScore. Hint: Usepd.merge()with"StudentID"as the key.

Instruction

-

Write your solutions as Python code cells.

-

Test each solution and verify correctness.

-

Submit only the

.ipynbfile or.pyscript containing your code. -

Solutions will be provided in a separate file for review.

-

Solutions: click me